By Airon Rodrigues

What’s more exciting than a newly launched website?

In a previous blog post on Site Structure, we discussed the importance of a foundation for a website. As an analogy, picture asking a builder to build a new home without any drawings or set plans. What results would you expect?

From an SEO perspective, there are critical factors involved in setting up a website so that it’s SEO-friendly.

Here’s an SEO checklist of what needs to be done directly after launching a site:

301 Redirect Mapping

When launching a new website, redirect mapping is a critical box to tick on the checklist.

Redirect mapping is vital because, without a 301-redirect strategy, a redesigned website can lose the traffic its old URLs earned. Without 301 redirects, every new URL has to work from the ground up to rank in natural search.

A 301-redirect strategy should be a part of your timeline at the very beginning of any website redesign. After the site is launched, the most important thing you need to do is to test the 301 redirects.

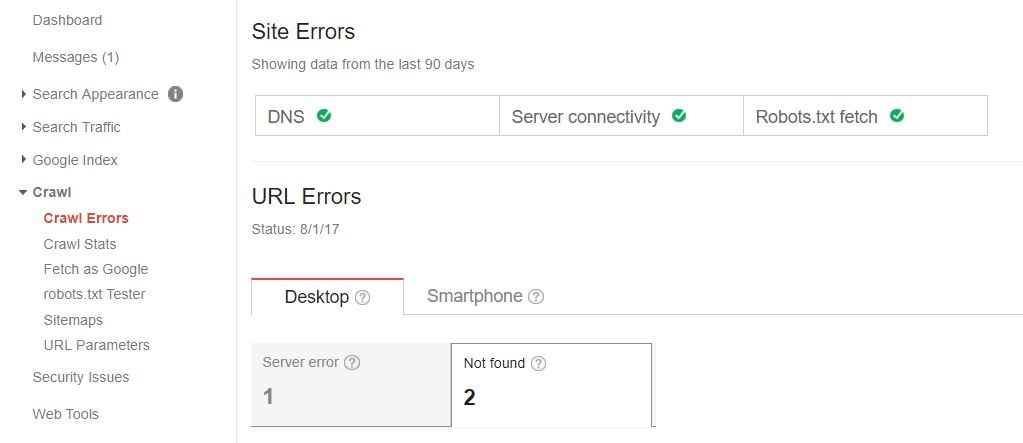

The simplest way to check for problematic redirects is to use Google Webmaster Tools (since renamed Google Search Console).

- First, log in to Google Webmaster Tools and select your site

- Click Crawl → Crawl Errors

- In the display, click “Not Found”

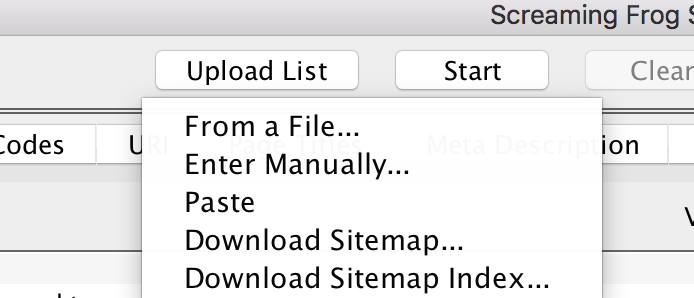

To review all the 301 redirects at once, Screaming Frog provides an ‘Enter Manually’ option where all the URLs can be inserted and tracked.

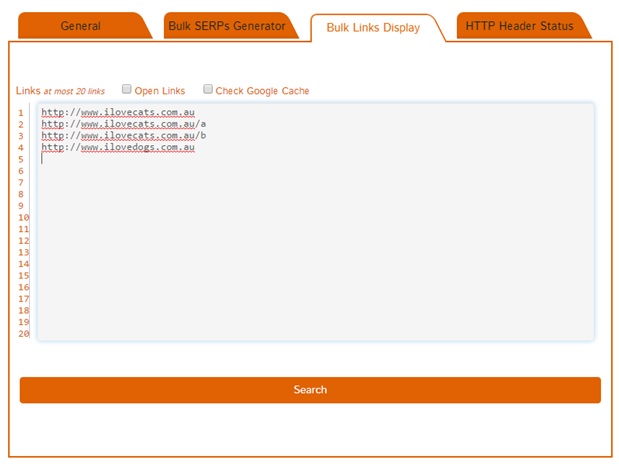

In addition, the Fenix SEO Extension supports a ‘Bulk Display’ option that can handle 20 URLs at once. This is another efficient way of checking whether the new 301 redirects are working.

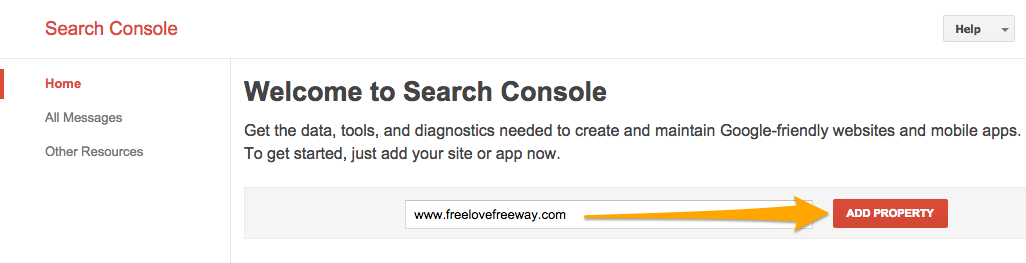
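For larger redirect maps, the same check can be scripted. The following is a minimal sketch rather than a replacement for the tools above: it assumes the redirect map is a plain dictionary of old URL to new URL, and the names `fetch_once` and `check_redirect_map` are illustrative. Each old URL is requested once without following the redirect, and anything that is not a 301 pointing at the mapped target is reported.

```python
import http.client
from typing import Callable, Dict, List, Optional, Tuple
from urllib.parse import urljoin, urlsplit

# (status code, Location header or None) for one request, redirects NOT followed
FetchResult = Tuple[int, Optional[str]]


def fetch_once(url: str, timeout: float = 10.0) -> FetchResult:
    """Send a single HEAD request and return (status, Location header)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def check_redirect_map(
    redirect_map: Dict[str, str],
    fetch: Callable[[str], FetchResult] = fetch_once,
) -> List[str]:
    """Return one message per old URL that is not a 301 to its mapped target."""
    problems = []
    for old_url, expected in redirect_map.items():
        status, location = fetch(old_url)
        if status != 301:
            problems.append(f"{old_url}: expected 301, got {status}")
        # A relative Location header is resolved against the old URL first.
        elif location is None or urljoin(old_url, location) != expected:
            problems.append(f"{old_url}: redirects to {location}, expected {expected}")
    return problems
```

Calling `check_redirect_map({"https://www.yourwebsite.com/old-page": "https://www.yourwebsite.com/new-page"})` returns an empty list when every redirect behaves as mapped. The `fetch` parameter exists so the logic can be exercised without touching the network.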

Set up Google Search Console

Setting up Google Search Console (GSC) is arguably one of the most important (if not the most important) steps of setting up a website for SEO.

Essentially, GSC monitors the performance of a website and provides key insights into how Google crawls, analyses and indexes pages.

GSC provides insights into crawling errors, HTML improvements, and sitemap indexation. A key part of GSC is Search Appearance which, as the name suggests, reports how a website is presented within the search results. The appearance of a website can be influenced by many factors, many of which appear within HTML Improvements. For a newly launched website, this information is key.

If a site is built on JavaScript frameworks such as AngularJS and ReactJS, it may be more valuable to set up GSC during the staging process, so you can see how Google renders the pages in case indexability turns out to be an issue.

Set up Google Analytics

Equally important is Google Analytics, a free website analytics service provided by Google that gives insights into how users find and use a website. The tool reports on website performance, providing valuable data such as site visits, page views, bounce rate, average time on site, pages per visit and percentage of new visits. This data can be analysed day to day, month to month and year to year.

As one of its main features, Google Analytics can track various traffic sources such as search engines, direct visits, website referrals and marketing campaigns. This is beneficial because identifying the most responsive traffic channels helps improve marketing strategies and shows what the target audience responds to.

In addition to Google Analytics, Google Tag Manager (GTM) is a tool that helps manage tags, the snippets of JavaScript that send information to third parties, on your website or mobile app. When set up with Google Analytics (GA), GTM can save time, make your site implementation more scalable and let you create customized tags.

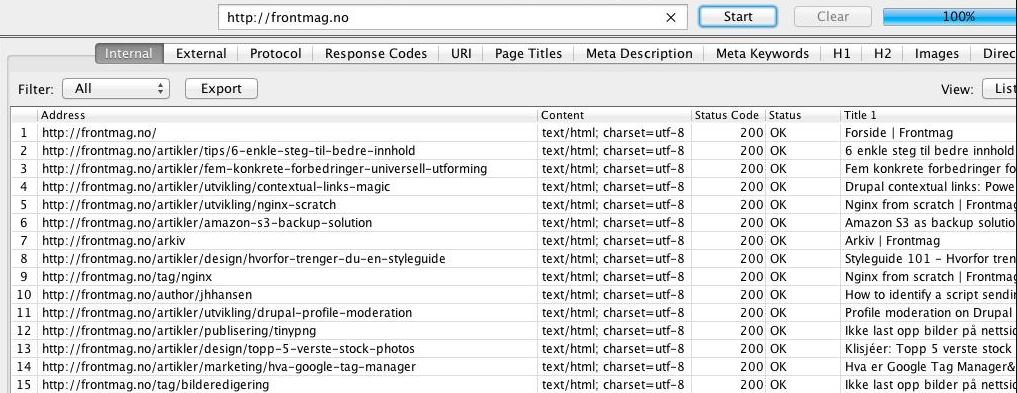

Download Screaming Frog and Perform a Site Crawl

Screaming Frog allows you to crawl a website’s URLs and identify the key on-site elements needed to analyse on-site SEO. Once you have plugged in the URL you want to crawl, you can analyse links, CSS, images and on-site SEO factors such as meta tags and on-page content. The Screaming Frog support pages give clear guidelines on how to get started when crawling a website.

In addition, Screaming Frog reports the status code of each URL, which makes it easy to spot URLs that return 302 redirects or 404 errors. The tool is available for download on PC or Mac.

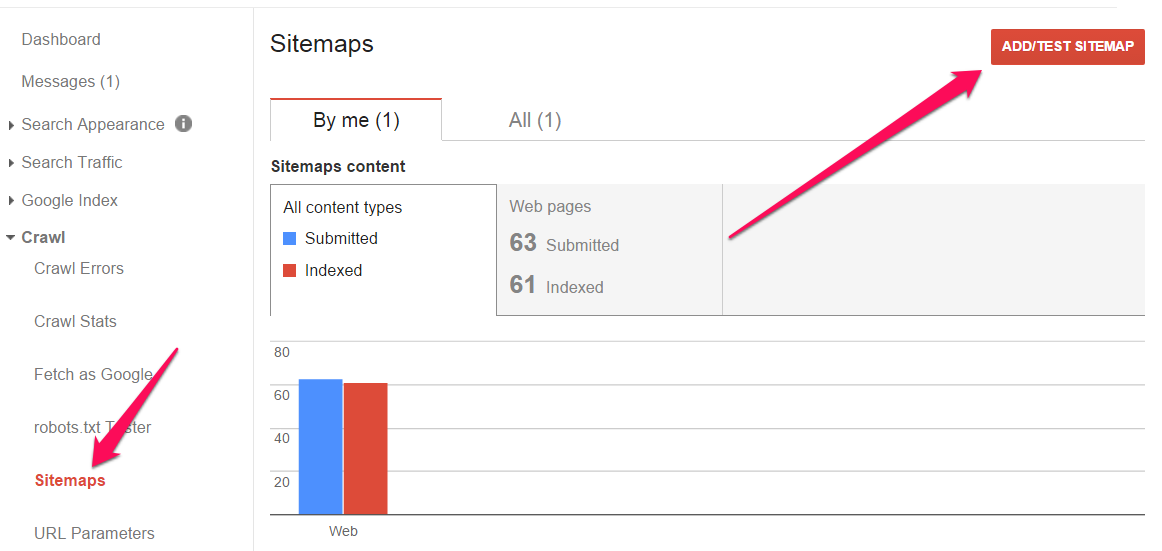

Review Your Sitemap

Sitemaps are extremely important. A sitemap communicates directly with search engines, reporting on the structure of a website. It also allows search engines to crawl a site quickly and successfully.

What are some ways to monitor and review a sitemap?

- Current Status. What’s the status of the sitemap? Has it been regularly updated? Or does it still contain old URLs that are no longer a part of the website? An XML sitemap should always be updated whenever new content is added to a site.

- Current Condition. Is the sitemap clean? Does it contain 4XX pages, non-canonical pages, redirected URLs, and pages blocked from indexing? If so, these pages need to be removed or fixed. The status of a sitemap can be checked for errors in Google Search Console, under Crawl > Sitemaps.

- Current Size. The sitemap protocol limits a single sitemap to 50,000 URLs. If the file contains more URLs, the list has to be broken down into multiple sitemaps. Ideally, a sitemap should err on the side of brevity: if a sitemap is kept small, the more important pages will get crawled more frequently.
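The status and size checks above can be sketched in a few lines of Python using only the standard library. This is an illustration under stated assumptions, not a full sitemap tool: the function names are made up for this example, the list of live URLs is assumed to come from your own crawl, and only the `<loc>` element of each entry is read or written.

```python
import xml.etree.ElementTree as ET
from typing import Iterable, Iterator, List

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # limit on URLs in a single sitemap file


def urls_in_sitemap(xml_text: str) -> List[str]:
    """Extract every <loc> value from a sitemap document."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.iter(f"{{{SITEMAP_NS}}}loc") if loc.text]


def stale_urls(sitemap_urls: Iterable[str], live_urls: Iterable[str]) -> List[str]:
    """Return sitemap entries that are no longer part of the site."""
    live = set(live_urls)
    return [url for url in sitemap_urls if url not in live]


def split_into_sitemaps(urls: List[str], limit: int = MAX_URLS_PER_SITEMAP) -> Iterator[List[str]]:
    """Yield consecutive chunks of at most `limit` URLs, one chunk per sitemap file."""
    for start in range(0, len(urls), limit):
        yield urls[start:start + limit]


def build_sitemap(urls: Iterable[str]) -> str:
    """Serialise a list of URLs as a minimal <urlset> document."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")
```

A site with 120,001 URLs, for example, would be split into three files of 50,000, 50,000 and 20,001 URLs. The status-code checks from the "Current Condition" point still need a crawler or a request per URL and are left out here.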

Review Your Robots.txt File

The robots.txt file is a text file that tells search engines which pages on your site to crawl and not to crawl.

The first thing a search engine spider like Googlebot looks at when it visits a site is the robots.txt file. Googlebot does this to find out whether it has permission to access a given page or file, which is why setting up a robots.txt file is crucial.

Common robots.txt set-ups all result in one of the following three outcomes:

- Full allow: All content may be crawled.

- Full disallow: No content may be crawled.

- Conditional allow: The directives in the robots.txt determine the ability to crawl certain content.

Unsure if a website contains a robots.txt file?

Just add “/robots.txt” to the end of a domain name as shown below.

www.yourwebsite.com/robots.txt
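To make the three outcomes concrete, the sketch below feeds one example file per outcome to Python's standard `urllib.robotparser` module and asks whether a URL may be crawled. The `/admin/` path and the helper name `can_crawl` are hypothetical, chosen only for illustration. The standard-library parser uses simple prefix matching, so its answers may differ from Googlebot's for more complex rules such as wildcards.

```python
from urllib.robotparser import RobotFileParser

# Full allow: an empty Disallow value blocks nothing.
FULL_ALLOW = """\
User-agent: *
Disallow:
"""

# Full disallow: "/" matches every path on the site.
FULL_DISALLOW = """\
User-agent: *
Disallow: /
"""

# Conditional allow: only the (hypothetical) /admin/ section is blocked.
CONDITIONAL_ALLOW = """\
User-agent: *
Disallow: /admin/
"""


def can_crawl(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt content lets user_agent crawl url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)
```

For instance, `can_crawl(CONDITIONAL_ALLOW, "https://www.yourwebsite.com/admin/login")` returns `False`, while the same call with a `/blog/` URL returns `True`.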

Test and Improve Page Speed

Page speed is one of the key ranking signals for Google in 2017.

As a guideline, we recommend a page load time of no more than 3 seconds on desktop and no more than 2 seconds on mobile.
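If load times are already being collected, from whichever testing tool you use, the guideline can be applied as a simple filter. The sketch below assumes measurements arrive as `(url, device, seconds)` tuples. That shape, and the name `slow_pages`, are assumptions made for this example rather than the output format of any particular tool.

```python
from typing import Iterable, List, Tuple

# Guideline limits from the text above, in seconds.
MAX_LOAD_SECONDS = {"desktop": 3.0, "mobile": 2.0}

# (url, device, measured load time in seconds)
Measurement = Tuple[str, str, float]


def slow_pages(measurements: Iterable[Measurement]) -> List[Measurement]:
    """Return the measurements whose load time exceeds the limit for their device."""
    return [
        (url, device, seconds)
        for url, device, seconds in measurements
        if seconds > MAX_LOAD_SECONDS[device]
    ]
```

A page that loads in exactly 3 seconds on desktop passes, since the guideline is "no more than"; a page that takes 2.4 seconds on mobile is flagged.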

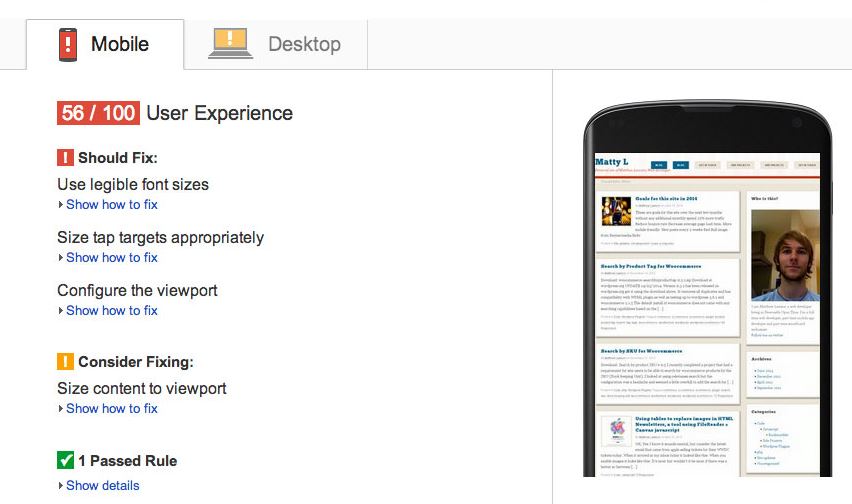

PageSpeed Insights can identify ways to make a site faster and more mobile-friendly, which is extremely important for a newly launched website.

If a page doesn’t pass some of the aspects of the test, Google will provide how-to-fix recommendations, as seen below:

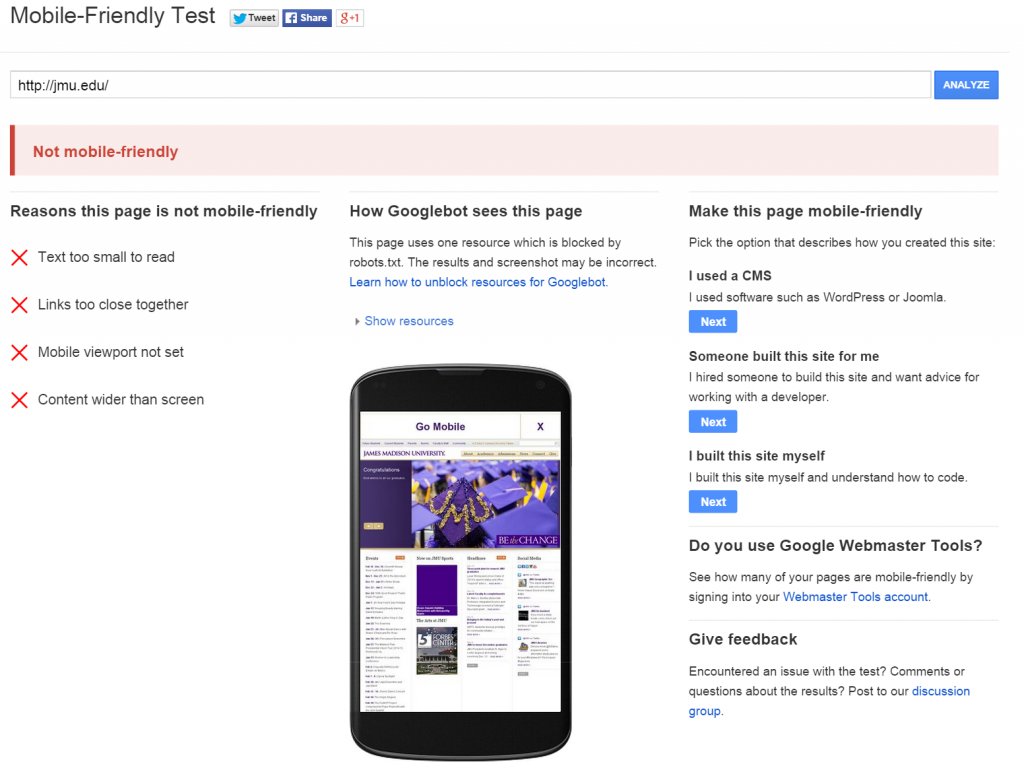

Ensure the Website Is Mobile Friendly

One of the top 3 SEO trends for 2017 is mobile. A mobile-friendly website is not only about the design and how the layout is displayed on the device, but also about the speed.

One way to significantly improve mobile speed is through AMP. With Accelerated Mobile Pages, users are becoming accustomed to websites loading faster, so using mobile testing tools such as Google’s Mobile-Friendly Test and WebPageTest is highly important for any new website. These tools check whether your website loads well on mobile devices and give you tips on what to fix if it doesn’t.

Ensuring that the steps above have been implemented is crucial for any new website. They lay the groundwork for every later stage of SEO.